The Hand in Hand Challenge has come to a successful close. The challenge invited teams to use sign language data to design barrier-free services for daily life and professional fields, including public transport, education, cultural life and residence.

Fourteen teams of two to five members, all interested in AI service development, participated in the event. The participants presented a range of ideas for barrier-free AI services built on sign language data, such as public traffic reports and sign language translation and interpretation systems.

Let’s find out who won the prizes in this intense competition.

First Prize – “Hand Cream” with the barrier-free public traffic report broadcast service

First, the grand prize went to the “Hand Cream” team. Its members all belong to the big data union club ToBig’s.

Team Members

Uijeong Kang (Department of Information Security, Seoul Women’s University), Miseong Kim (Department of Computer Science and Engineering, Seoul National University of Science and Technology), Doyeon Lee (Department of IT Engineering, Sookmyung Women’s University), Jeongeun Lee (Department of Data Science, Sejong University), Hyewon Cho (Department of Oriental Painting & Department of Information and Computer Engineering, Hongik University)

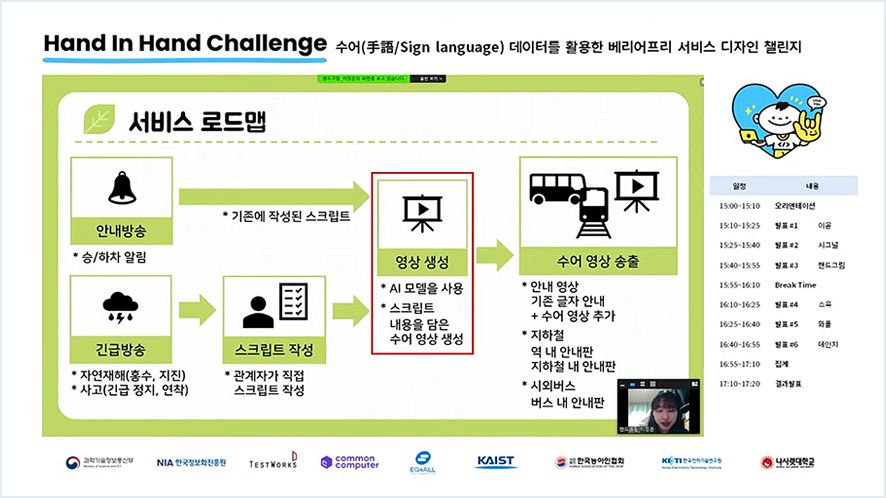

[The “Hand Cream” team’s service roadmap, from the online introduction session]

The “Hand Cream” team started from the idea of making sign language translation as easy to use as Google Translate, and proposed a sign language translation system for public transportation.

The team demonstrated strong competence across several areas, including system configuration, plans for advancement and application, and model design. Hand Cream took first place by proposing sign language translation model structures and publishing its code on GitHub to show that the work was substantive.

[Photo of Testworks CEO Dale Yoon and the Hand Cream team at the awards ceremony]

“Most of all, I was glad to develop a service that helps society by using sign language data during the contest. On top of that, my team won the grand prize, so this contest is a very memorable event for me.” – Jeongeun Lee

“I was interested in sign language translation and generation models and had been studying them even before this contest. The award is meaningful because it confirms that the sign language dataset and our sign language model can create real value. The sense of accomplishment and joy will be the driving force for me to continue studying sign language models.” – Hyewon Cho

Second Prize – “SSSG” with a real-time sign language translation system using AR technology

Next, the second prize went to the “SSSG” team. The team members are Korea University alumni. They proposed an AR sign language translation solution that hard-of-hearing people can use at government offices and public institutions.

Team Members

Jinho Park (Korea University), Sojin Kim (Korea University), Seonpil Hwang (Korea University)

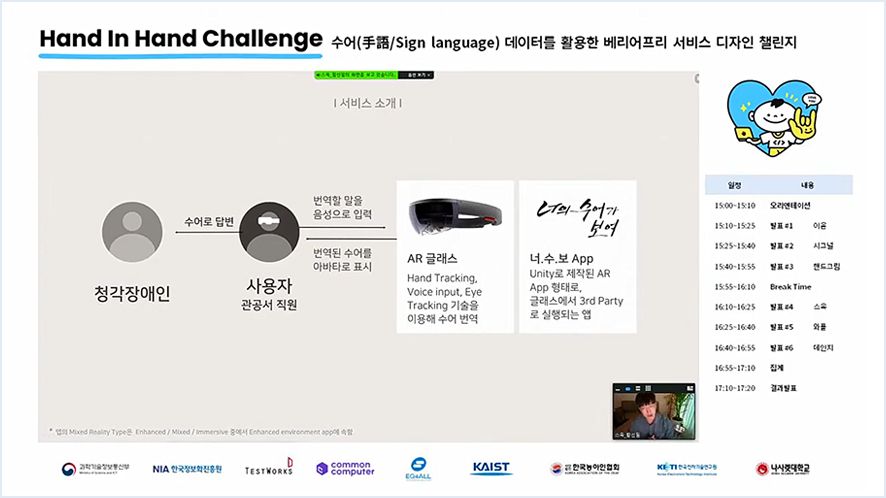

[The “SSSG” team’s service, from the online introduction session]

The advantage of this team’s AR sign language translation solution is that people wearing AR glasses can talk with others while still seeing their eyes and facial expressions. The idea was also praised because people with disabilities would not have to buy the device themselves: all the necessary equipment can be mass-produced and distributed to government offices. SSSG won second place with a strong idea and practical AI technology.

[Photo of Testworks CEO Dale Yoon and the SSSG team at the awards ceremony]

“I had not felt this kind of urgency in a while, but during the contest the tight deadline terrified me, and I felt as if it had taken years off my life. It helped me break out of a rut and was a good opportunity to see how we can contribute to society with our abilities. The results were better than I expected, and I cannot describe how happy I am.” – Seongpil Hwang

Third Prize – “Signal” with a sign language interpretation service based on a sign language learning system

Lastly, the third prize went to the “Signal” team. The team proposed online education in both sign and spoken language to improve the quality of education for hard-of-hearing people and to expand sign language class services.

Team Members

Hyewon Kim (Master’s program in marketing, School of Business, Konkuk University), Myeongsu Kim (Doctoral program, AI cloud-related research project at Georgia Institute of Technology)

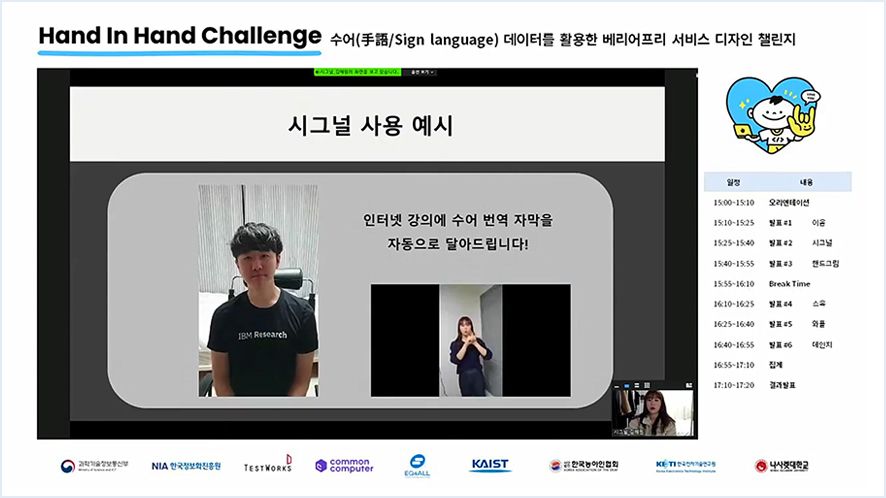

[The “Signal” team’s usage example, from the online introduction session]

The “Signal” team’s service design, based on a sign language learning system that uses AI and big data, was highly praised because its training data can keep growing through web data collection and user feedback. Signal also proved the system’s potential by running it in a live demonstration, and won the third prize.

[Photo of Testworks CEO Dale Yoon and the Signal team at the awards ceremony]

“I will further develop this program with other developers so that it can become a real service. I am eager to provide a high-quality service with AI technology that can turn discomfort into comfort. Thank you very much for recognizing the potential of ‘Signal’. I will improve this program so that it can be applied in broader areas. It was a good chance for me to understand the importance of sign language. I hope AI technology and services can bring about a barrier-free world for all people. Thank you.” – Hyewon Kim

We greatly appreciate everyone’s participation in Testworks’ Hand in Hand Challenge for barrier-free service design.

With your interest and support, Testworks will grow into a pioneering company that creates social value through technology.