Summary

These days, LiDAR-based 3D AI is demonstrating performance comparable to that of 2D image-based AI. As a result, 3D data collected with LiDAR, and the processed datasets built from it, have become another kind of dataset that AI developers want to work with. However, LiDAR datasets are often difficult even to inspect, let alone process. LiDAR equipment is still expensive, the data itself is hard to process, and it is not easy for users to build a dataset on their own. Furthermore, few public datasets exist, so relying on open datasets is not easy either. The development of AI technology is accelerating across various industries thanks to the Korean government’s Digital New Deal policy, but LiDAR datasets, which are considered the core data for 3D AI, remain hard to obtain.

For this reason, Testworks is exploring many options for building LiDAR datasets, the core data required for 3D AI. In this article, I would like to share my views on 3D AI and on how Testworks is developing automated LiDAR dataset processing with its own 3D AI.

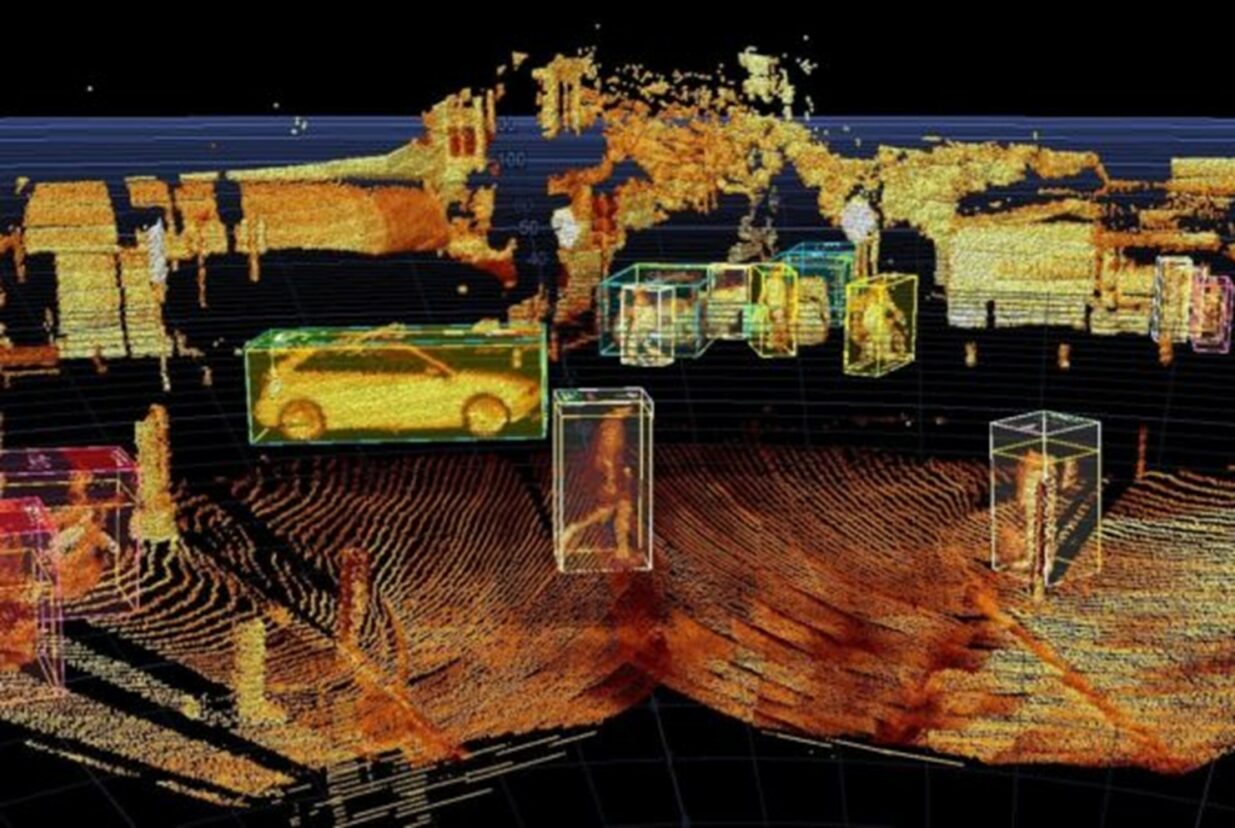

(Image provided by Innoviz)

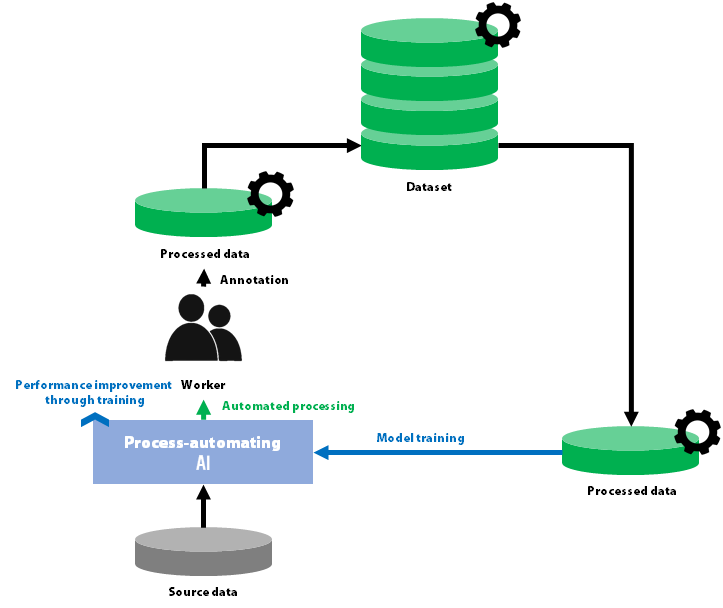

Automated Process of AI Dataset Processing

Using a well-trained AI model to automate dataset processing improves the efficiency of data processing. Also called ‘auto-labeling’, this method is quite useful for building datasets. At the start, the benefit of automation is hard to see because few processed datasets exist, but once a high-quality dataset is in place, both the performance of automatic processing and the efficiency of processing tasks improve greatly. With that dataset as a base, further datasets can be built in a shorter time. In other words, using an AI model suited to each type of data makes the next datasets easier to construct.
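The auto-labeling idea described above can be sketched as a simple triage step: a model proposes labels, confident proposals are accepted, and the rest go to a human worker. This is only a minimal illustration. The `Proposal` structure, the `triage` function and the 0.7 threshold are all hypothetical and are not taken from the Testworks pipeline.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Proposal:
    """A label proposed by a detection model (hypothetical structure)."""
    label: str
    score: float  # model confidence in [0, 1]


def triage(proposals: List[Proposal],
           accept_at: float = 0.7) -> Tuple[List[Proposal], List[Proposal]]:
    """Split proposals into auto-accepted ones and ones needing human review."""
    accepted = [p for p in proposals if p.score >= accept_at]
    review = [p for p in proposals if p.score < accept_at]
    return accepted, review


# Made-up model output for one frame.
proposals = [
    Proposal("car", 0.93),
    Proposal("pedestrian", 0.41),
    Proposal("car", 0.78),
]
accepted, review = triage(proposals)
```

As the dataset and the model improve, a larger share of proposals would be expected to land in `accepted`, which is where the efficiency gain comes from.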

Because data and datasets are so diverse, the AI required by each industry will certainly vary. Developing and operating an automation model with a suitable AI model for every type of data therefore requires a great deal of research, analysis and development. This work is still in progress, and it is a very important task for me as an AI researcher. For LiDAR data, processing tools and automation have become unavoidable alongside AI research. In this article, I will describe the process of developing an automation model for processing LiDAR data.

Training 3D AI for Automatic Processing

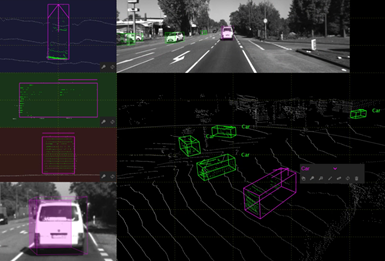

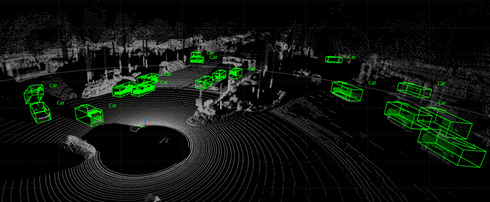

A dataset for training a 3D object detection model is built by processing cuboids in the LiDAR data. As shown in [Fig 3], the process first locates the area where an object exists and then determines the position, size and rotation of the cuboid. Done one object at a time, this takes longer than processing an object in a 2D image.
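To make the three cuboid parameters concrete, the sketch below computes the eight corners of a cuboid from its position, size and rotation. It assumes a z-up coordinate frame with rotation (yaw) about the vertical axis only. Datasets use different axis conventions, so the frame and the function name `cuboid_corners` are illustrative assumptions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def cuboid_corners(center: Point, size: Point, yaw: float) -> List[Point]:
    """Return the 8 corners of a cuboid.

    center: (x, y, z) of the cuboid centre
    size:   (length, width, height) along the cuboid's own x, y, z axes
    yaw:    rotation about the vertical (z) axis, in radians
    """
    cx, cy, cz = center
    length, width, height = size
    c, s = math.cos(yaw), math.sin(yaw)
    corners = []
    for dx in (-0.5, 0.5):
        for dy in (-0.5, 0.5):
            for dz in (-0.5, 0.5):
                # Corner offset in the cuboid's local frame.
                lx, ly, lz = dx * length, dy * width, dz * height
                # Rotate about z, then translate to the centre.
                corners.append((cx + c * lx - s * ly,
                                cy + s * lx + c * ly,
                                cz + lz))
    return corners


# A 4 m x 2 m x 1.5 m cuboid turned by 90 degrees.
corners = cuboid_corners(center=(10.0, 2.0, 0.0),
                         size=(4.0, 2.0, 1.5),
                         yaw=math.pi / 2)
```

Seven numbers (three for position, three for size, one for yaw) fully describe the cuboid under this assumption, which is why each object takes more effort to set by hand than a 2D rectangle.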

To address this issue, a 3D object detection model was developed and tested for automated cuboid processing. To build the automated processing model, an AI model was selected first, followed by a dataset to train it.

Selecting an AI Model

Since the release of VoxelNet in 2017, the performance of 3D object detection models has improved significantly. Like image-based 2D object detection, 3D object detection has matured to a certain extent, and several open-source implementations have been released as a result. As of 2021, OpenPCDet and SECOND are among the most popular. Of the two, the SECOND code was selected for the test.
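VoxelNet and SECOND both begin by grouping the raw point cloud into a regular 3D grid of voxels. The sketch below shows only that grouping step in plain Python. The function name `voxelize` and the voxel size are illustrative assumptions, and real implementations additionally crop the range and cap the number of points per voxel.

```python
from collections import defaultdict
from math import floor
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
VoxelIndex = Tuple[int, int, int]


def voxelize(points: List[Point],
             voxel_size: Tuple[float, float, float]) -> Dict[VoxelIndex, List[Point]]:
    """Group points by the index of the voxel that contains them."""
    voxels: Dict[VoxelIndex, List[Point]] = defaultdict(list)
    for x, y, z in points:
        # Integer grid index along each axis (floor handles negatives correctly).
        idx = (floor(x / voxel_size[0]),
               floor(y / voxel_size[1]),
               floor(z / voxel_size[2]))
        voxels[idx].append((x, y, z))
    return dict(voxels)


# Four made-up points and an illustrative voxel size in metres.
points = [(0.05, 0.05, 0.1), (0.15, 0.02, 0.3), (1.30, 0.10, 0.2), (-0.10, 0.00, 0.0)]
voxels = voxelize(points, voxel_size=(0.2, 0.2, 4.0))
```

The detector then learns features per voxel rather than per point, which is what makes convolution-style processing of an irregular point cloud possible.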

Selecting a Dataset

Two types of LiDAR datasets were selected. One is an autonomous driving dataset that contains driving environment data, and the other is an indoor LiDAR dataset that contains indoor environment data.

In the past, LiDAR AI research and data collection were conducted only in the field of autonomous driving. As a result, most published datasets come from that field. Representative examples are the KITTI and nuScenes datasets, where processing focuses mainly on moving objects encountered while driving (e.g., vehicles, pedestrians, motorcycles, bicycles). The KITTI dataset was selected as the autonomous driving dataset for the automated processing test.
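For readers who have not seen KITTI annotations, each object is stored as one line of space-separated fields: type, truncation, occlusion, observation angle, a 2D box (4 values), 3D dimensions (height, width, length), 3D location in camera coordinates (x, y, z) and rotation around the camera y-axis. The sketch below reads the 3D part of such a line. The `KittiObject` class, the parser name and the sample line are made up for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class KittiObject:
    type: str
    dimensions: Tuple[float, float, float]  # height, width, length (m)
    location: Tuple[float, float, float]    # x, y, z in camera coordinates (m)
    rotation_y: float                       # yaw around the camera y-axis (rad)


def parse_kitti_label_line(line: str) -> KittiObject:
    """Parse the 3D cuboid fields from one KITTI object label line."""
    f = line.split()
    return KittiObject(
        type=f[0],
        dimensions=(float(f[8]), float(f[9]), float(f[10])),
        location=(float(f[11]), float(f[12]), float(f[13])),
        rotation_y=float(f[14]),
    )


# Made-up line in the KITTI field order, not copied from the dataset.
sample = "Car 0.00 0 -1.50 600.0 170.0 620.0 200.0 1.50 1.60 3.90 1.00 1.70 40.00 -1.55"
obj = parse_kitti_label_line(sample)
```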

(Image provided by cvlibs website)

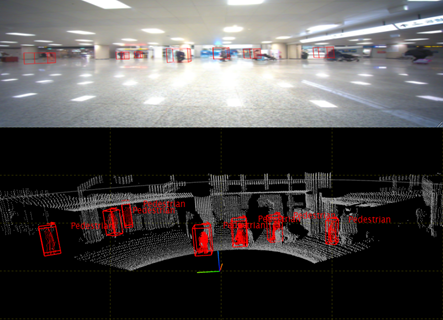

Luckily, in 2021, an indoor LiDAR and synchronized camera video dataset was released on AI Hub, a Korean AI platform. The data was collected by placing a LiDAR sensor in an indoor environment with pedestrians, and all moving objects, including pedestrians, are processed. This appears to be a response to the recognition that 3D AI applications had been limited to autonomous driving, and an effort to expand their purposes and fields of application. Although it is an unusual dataset with few precedents, it is of good quality, so it was selected as the indoor pedestrian dataset for the automated processing test.

(Image provided by AI Hub website)

Automated Processing Test Results

Autonomous Driving Dataset Test

The automated processing model trained on the KITTI dataset was tested first. As might be expected from a long-researched field such as autonomous driving, it captures vehicles very well. Direction detection is also very accurate.

To verify whether the model is suitable for more practical applications, I then ran automated processing on LiDAR data from outside the KITTI dataset, collected with other manufacturers' equipment. Because the form of LiDAR data differs by manufacturer and equipment specification, there were concerns that automated processing performance might degrade. Nevertheless, objects were located and processed very accurately. Automated processing of datasets collected in the field of autonomous driving is therefore not expected to pose problems.

Indoor Dataset Test

The automated processing model trained on the AI Hub dataset was tested next. It located pedestrians on a par with the model trained on the autonomous driving dataset. Direction detection fell somewhat below expectations, but this is expected to improve as more high-quality datasets become available in this field.

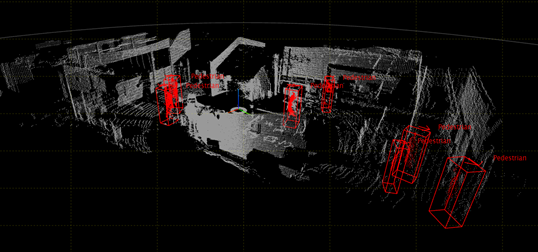

The automated processing model was then tested again on data collected in the company building with company-owned LiDAR equipment. Because the capture environment differed from that of the training dataset, many objects other than pedestrians were tagged unnecessarily, but overall performance was acceptable. Successfully processing the required objects alone can already increase efficiency for human workers, and if the model is trained continuously on refined datasets, automation performance can improve further.
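One possible way to reduce this kind of over-tagging before human review is a simple size sanity check on each predicted cuboid. This is not the method used in the test described here. The function name and every threshold below are hypothetical values chosen only to illustrate the idea.

```python
from typing import List, Tuple

Size = Tuple[float, float, float]  # (length, width, height) in metres


def plausible_pedestrian(size: Size,
                         min_height: float = 1.0,
                         max_height: float = 2.2,
                         max_footprint: float = 1.2) -> bool:
    """Return True if a cuboid's size is plausible for a standing person.

    All thresholds are illustrative assumptions, not measured values.
    """
    length, width, height = size
    tall_enough = min_height <= height <= max_height
    small_footprint = max(length, width) <= max_footprint
    return tall_enough and small_footprint


# Made-up predicted cuboid sizes: a person, a low wide object, a shorter person.
detections: List[Size] = [(0.6, 0.5, 1.7), (2.0, 0.8, 0.9), (0.7, 0.7, 1.1)]
kept = [d for d in detections if plausible_pedestrian(d)]
```

A filter like this only removes obviously implausible cuboids. Retraining on refined data, as described above, remains the way to address the underlying cause.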

Test Results

The automated processing test on LiDAR datasets was more successful than initially expected. In both autonomous driving and indoor environments, objects were located and captured accurately, and performance was considerably high. The over-tagging that arises from capturing objects in unnecessary areas is expected to improve significantly through training on additional datasets in the future.

The LiDAR datasets themselves were also of good quality. The advantage of currently published LiDAR datasets is that their quality is high even though their quantity is limited. Unlike 2D image data, where the criteria for selecting objects or setting areas can be ambiguous in each field of application, the criteria for LiDAR data are very clear. Therefore, although the initial barrier to entry for data processing work may be somewhat high, a little adaptation allows very intuitive and accurate processing. In the future, building high-quality datasets may well go more smoothly for LiDAR than for other types of data.

Conclusion

Compared to the past, the development of AI technology is now driven by data rather than models. And just as the fields of application for AI are endless, so too are the types of data and processing. 3D AI and dataset construction using LiDAR data are expected to head in a more positive direction in the future.

Testworks is accelerating its own research into building various AI datasets more efficiently. Through this research and development, we expect to verify the applications of various AI data and to continue growing as an AI specialist while promoting the creation of high-quality datasets.

Yesung Park

Researcher, AI R&D Team

Bachelor of Mechanical System Design Engineering, Hongik University

Master of Engineering, Department of Intelligent Robot Engineering, Hanyang University

He conducted robot research using AI at the Intelligent Robot Research Institute at Hanyang University. Since then, he has been interested in computer vision and deep learning and is currently working in the Testworks AI R&D team, where he is researching GANs and 3D AI.